Music, Tea, and P-Values: A Tale of Impossible Results and P-Hacking

In 2011, a fascinating study claimed that listening to specific songs can make you younger. The surprising assertion caught the interest of many. A closer look, however, reveals a broader and deeper lesson about an intriguing yet often misunderstood concept in statistical analysis: the p-value.

Introduction: Can Music Make People Younger?

The study, fascinating as it was, involved real participants and reported real data. Participants were split into three groups, each group listened to a different song, and the researchers collected a wide range of information about them. The published analysis showed that participants who heard one particular song were about 1.5 years younger than those who heard a control song. This remarkable result came with a p-value of 0.04, below the 0.05 threshold conventionally treated as significant in many scientific disciplines.

However, the study was not what it appeared to be at first glance.

Highlights

- A 2011 Study Claimed Music Could Make You Younger Using P-Values

- The Result Relied on Manipulating P-Values, a Practice Called P-Hacking

- P-Hacking Tests Hypotheses Until Statistical Significance Is Achieved

- P-Hacking Can Lead to Absurd Results in Scientific Research Studies

- False Positives from P-Hacking Mislead Researchers and the Public

Methodology of the Study and Its Statistical Flaws

The fundamental aim of the music study was to show how easily p-values can be misused. The researchers split participants into three groups, yet the published analysis included only two of them. They also “controlled” for variation in baseline age across participants by using the participants’ fathers’ ages as a covariate. This odd methodological choice, together with the selective reporting, is a critical warning sign of statistical flaws.
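To see why quietly dropping one of three groups matters, here is a minimal simulation sketch. It assumes, purely for illustration, three groups of 20 participants whose scores all come from the same normal distribution with a known standard deviation of 1, so any “significant” difference is a false positive by construction. The group size, the simple z-test, and the function names are hypothetical choices, not details of the original study. It compares one pre-planned comparison with reporting whichever of the three pairwise comparisons happens to look best.

```python
import itertools
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()

def z_test_p(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known common SD."""
    se = sigma * (1 / len(a) + 1 / len(b)) ** 0.5
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))

def simulate_selective_reporting(n_sims=4000, n_per_group=20, alpha=0.05, seed=1):
    """Return (false-positive rate of one planned comparison,
               false-positive rate when the best of three pairs is reported)."""
    rng = random.Random(seed)
    planned_hits = 0   # only the pre-planned comparison: group 0 vs group 1
    any_pair_hits = 0  # report whichever pairwise comparison is significant
    for _ in range(n_sims):
        # Null world (an assumption): every group comes from the same distribution.
        groups = [[rng.gauss(0, 1) for _ in range(n_per_group)] for _ in range(3)]
        # Pairs in order: (0, 1), (0, 2), (1, 2).
        p_values = [z_test_p(groups[i], groups[j])
                    for i, j in itertools.combinations(range(3), 2)]
        planned_hits += (p_values[0] < alpha)
        any_pair_hits += (min(p_values) < alpha)
    return planned_hits / n_sims, any_pair_hits / n_sims

planned, cherry_picked = simulate_selective_reporting()
print(f"planned comparison:  {planned:.3f}")
print(f"best of three pairs: {cherry_picked:.3f}")
```

The planned comparison should stay close to the nominal 5%, while picking the best pair pushes the false-positive rate noticeably higher. Because the three comparisons share data, the inflation is somewhat smaller than the 1 - 0.95^3 (about 14%) you would expect from three independent tests, but it is well above 5%.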

But it doesn’t stop there.

The researchers also paused to analyze the data after every ten participants. If the p-value was above 0.05, they kept collecting data; as soon as it fell below 0.05, they stopped the experiment. This practice is an explicit example of manipulating the research process to obtain a statistically significant result, a strategy known as p-hacking.
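The effect of this stopping rule can be sketched with a small simulation. It assumes two conditions with no true difference, a known standard deviation of 1, batches of ten participants split evenly between the conditions, and at most 20 looks at the data. These numbers, the z-test, and the function names are illustrative assumptions; the study’s exact analysis is not reproduced here. The same simulated data are analyzed twice: once with a single test at the end, and once with a check after every batch.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()

def two_sided_p(sum_a, sum_b, n, sigma=1.0):
    """Two-sided z-test p-value for two groups of size n with a known common SD."""
    diff = (sum_a - sum_b) / n
    se = sigma * (2 / n) ** 0.5
    return 2 * (1 - STD_NORMAL.cdf(abs(diff) / se))

def simulate_optional_stopping(n_sims=2000, batch=10, max_batches=20,
                               alpha=0.05, seed=2):
    """Return (false-positive rate with one final test,
               false-positive rate when checking after every batch)."""
    rng = random.Random(seed)
    fixed_hits = 0    # honest design: a single test at the planned final size
    peeking_hits = 0  # p-hacked design: "significant" at any interim look
    for _ in range(n_sims):
        sum_a = sum_b = 0.0
        n = 0  # participants per condition so far
        hit_at_some_look = False
        for _ in range(max_batches):
            # Each batch is split evenly between two conditions that,
            # by construction, have no true difference.
            for _ in range(batch // 2):
                sum_a += rng.gauss(0, 1)
                sum_b += rng.gauss(0, 1)
            n += batch // 2
            if two_sided_p(sum_a, sum_b, n) < alpha:
                # A p-hacker would stop and report here. We keep simulating
                # so the same data can also be analyzed the honest way.
                hit_at_some_look = True
        peeking_hits += hit_at_some_look
        fixed_hits += (two_sided_p(sum_a, sum_b, n) < alpha)
    return fixed_hits / n_sims, peeking_hits / n_sims

fixed, peeking = simulate_optional_stopping()
print(f"single test at the end: {fixed:.3f}")
print(f"check after each batch: {peeking:.3f}")
```

Even though no effect exists, repeatedly checking and stopping at the first p below 0.05 produces a false-positive rate several times the nominal 5%, while the single final test stays near 5%.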

Introduction to P-hacking

P-hacking means repeatedly testing different hypotheses, or analyzing the same data in different ways, until a statistically significant result appears. Modern data-analysis tools make it easy to run many such analyses quickly. In the music study, the researchers conducted an array of tests and presented only the one that reached statistical significance. The practice is akin to throwing darts until one hits the bullseye, then claiming it was the only dart you threw.
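The dart analogy can be made quantitative. If every null hypothesis is true and the tests are independent (a simplifying assumption; analyses run on the same data are usually correlated), the chance of at least one false positive among k tests at level alpha is 1 - (1 - alpha)^k. The function below is a hypothetical helper that computes this quantity.

```python
def familywise_error_rate(k, alpha=0.05):
    """Chance of at least one false positive among k independent tests
    when every null hypothesis is true: 1 - (1 - alpha) ** k."""
    return 1 - (1 - alpha) ** k

for k in (1, 5, 10, 20):
    print(f"{k:>2} tests -> {familywise_error_rate(k):.2f}")
```

With alpha = 0.05 this gives roughly 0.05, 0.23, 0.40 and 0.64 for 1, 5, 10 and 20 tests: throw enough darts and a bullseye becomes more likely than not.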

Viewed through this study, p-hacking turns an otherwise simple experiment into an outlandish, impossible result. The phenomenon is problematic because it skews research output toward the desired results, pulling science away from its core ethos of unbiased discovery.

Consequences of P-hacking and False Positives

The music study shows clearly where p-hacking can lead: to the absurd conclusion that certain songs can reduce a person’s age. P-hacking increases the rate of false positives in research, misleading other researchers and the public. False positives waste significant resources when other researchers try to replicate or build upon these “phantom” results, further promoting erroneous theories.

P-hacking also damages the credibility of scientific research. Such malpractice erodes public trust in scientific studies and can harm policy-making and the funding of future research.

Importance of Correct Statistical Analysis

The music study vividly demonstrates the critical importance of rigorous statistical analysis in scientific research. When used correctly, p-values are a valuable tool in our statistical toolbox. They help us judge whether a result could plausibly have arisen by chance alone or is more likely to reflect a genuine effect.
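One way to make “could have arisen by chance” concrete is a permutation test, sketched below as one illustrative technique among many. If the group labels did not matter, randomly reshuffling them should often produce a difference as large as the observed one; the p-value estimates how often that happens. The function name and the example data are invented for illustration.

```python
import random
from statistics import fmean

def permutation_p_value(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation p-value for a difference in means.

    Estimates how often randomly relabelling the pooled observations gives
    a difference in means at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(fmean(a) - fmean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:len(a)]) - fmean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    return (extreme + 1) / (n_perm + 1)

# Invented example data: every value in group_a exceeds every value in group_b.
group_a = [5.1, 4.8, 5.6, 5.0, 5.3]
group_b = [4.2, 4.5, 4.0, 4.4, 4.6]
print(permutation_p_value(group_a, group_b))            # small: few relabellings are this extreme
print(permutation_p_value([1, 2, 3, 4], [1, 2, 3, 4]))  # 1.0: no difference at all
```

Note that this calculation is only meaningful if the comparison was chosen before looking at the data; running it on whichever comparison looked best is exactly the p-hacking described above.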

However, p-values are only as reliable as the methodology that produces them. If the methods are compromised by practices like p-hacking, the p-value loses its reliability, and the conclusions become questionable.

Responsible scientific research requires transparency, replicability, and honest representation of data. The goal is not just to get significant results but to get results that are genuinely significant.

Note: The inclusion of ‘tea’ in the title of this article refers to a famous statistical experiment known as the “Lady Tasting Tea” experiment. It serves as a simple and classic illustration of hypothesis testing and the concept of p-values in statistics. This experiment is often used as an introductory example in statistical education to highlight the principles of scientific methodology. By alluding to this experiment, we aim to establish a bridge between the complexities of the 2011 study and fundamental statistical concepts, facilitating a better understanding of p-values and the phenomenon of p-hacking.
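In Ronald Fisher’s classic version of the experiment, the lady is given eight cups, four with milk poured first and four with tea poured first, and asked to pick out the four milk-first cups. Under the null hypothesis that she is merely guessing, the p-value is an exact counting exercise (a hypergeometric tail). The helper below is a hypothetical sketch of that calculation.

```python
from math import comb

def tea_tasting_p_value(correct, cups=8, milk_first=4):
    """Exact one-sided p-value: probability of identifying at least `correct`
    milk-first cups by pure guessing."""
    total = comb(cups, milk_first)  # ways to choose which cups to call milk-first
    ways = sum(comb(milk_first, k) * comb(cups - milk_first, milk_first - k)
               for k in range(correct, milk_first + 1))
    return ways / total

print(tea_tasting_p_value(4))  # all four right: equals 1/70
print(tea_tasting_p_value(3))  # at least three right: equals 17/70
```

Only a perfect score falls below 0.05 (1/70, about 0.014). Getting at least three right happens by chance about 24% of the time, which is why the design, fixed in advance, makes a clean test.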

References and Further Reading

This article delves into a complex topic with critical implications for how scientific research is conducted and interpreted. P-values are foundational in statistics and data analysis, yet they are often misunderstood or misused, and p-hacking is one common form of misuse that can lead to misleading results.

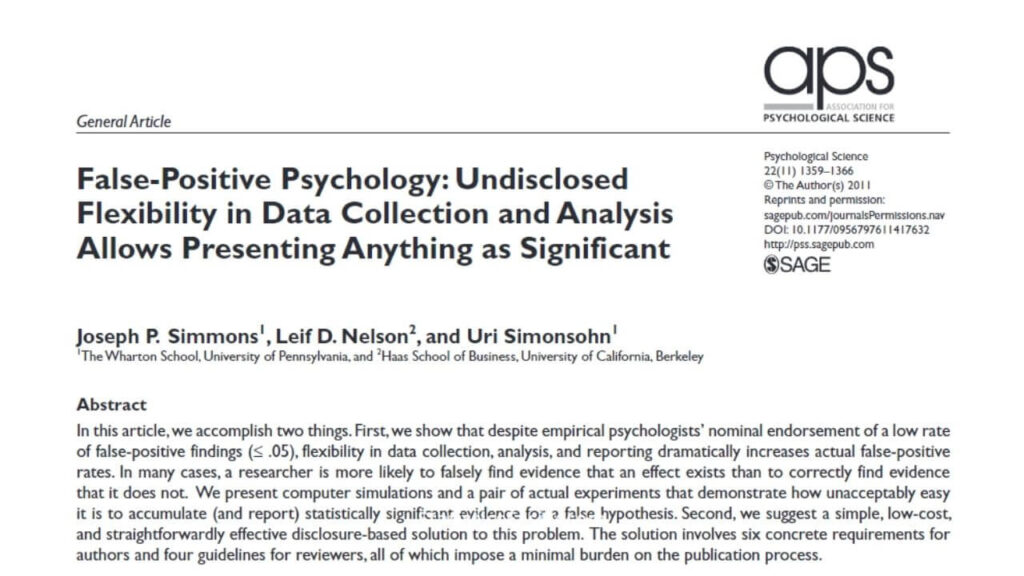

Our discussion here is inspired by and closely relates to the work of Simmons, Nelson, and Simonsohn in their 2011 article “False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant.” This article thoroughly examines how flexibility in data collection, analysis, and reporting can lead to an inflated rate of false-positive findings. The authors present simulations and experiments demonstrating how disturbingly easy it is to amass and report statistically significant evidence for a false hypothesis.

Another significant resource that served as a basis for our article is the insightful TED-Ed video by James A. Smith, “The method that can ‘prove’ almost anything.” It presents these statistical concepts in a way that’s accessible and engaging, perfect for those who are new to these topics or wish to gain a deeper understanding.

To further your understanding of these topics and to see more in-depth discussion and potential solutions to these issues, we highly recommend reading the full article by Simmons, Nelson, and Simonsohn and watching the TED-Ed video by James A. Smith.

Recommended Articles

Interested in learning more about statistical analysis and its vital role in scientific research? Explore our blog for more insights and discussions on relevant topics.

- How To Lie With Statistics?

- Why ‘Statistics Are Like Bikinis’

- The ‘Lady Tasting Tea’ Experiment

- Statistics And Fake News: A Deeper Look

- P-hacking: A Hidden Threat

- What Does The P-Value Mean?

- What Does The P-Value Mean? Revisited

Frequently Asked Questions (FAQs)

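Q1: What is a p-value? It’s the probability, assuming there is no real effect (the null hypothesis is true), of obtaining a result at least as extreme as the one observed. A small p-value is commonly taken as an indication that a result is statistically significant.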

Q2: What is p-hacking? It’s a practice where researchers keep testing different hypotheses, or analyzing the same data in different ways, until they obtain a statistically significant result, and then report only that result.

Q3: How does p-hacking affect scientific research? P-hacking increases the rate of false positives in research, misleading other researchers and eroding public trust in scientific studies.

Q4: What was the goal of the 2011 music study? The goal was to demonstrate how p-values could be misused, leading to misleading and even impossible results.

Q5: Why is the misuse of p-values a concern? Misusing p-values can lead to false positives, erroneous theories, wasted resources, and decreased public trust in science.

Q6: How can we prevent p-hacking? Practices such as pre-registering a detailed plan for the experiment and analysis can help prevent p-hacking.

Q7: What is a false positive in research? A false positive is a result that wrongly indicates an effect or condition is present when it is not, such as a statistically significant finding for an effect that does not exist.

Q8: What were the methodological flaws in the music study? Selective data reporting and testing until a significant p-value was achieved are critical indicators of methodological flaws.

Q9: How does p-hacking impact the interpretation of p-values? If the methods generating p-values are compromised by p-hacking, the p-value loses its reliability, and the conclusions become questionable.

Q10: What is the role of p-values in statistical analysis? When used correctly, p-values help judge whether a result could plausibly have arisen by chance alone or is more likely to reflect a genuine effect.