Richard Feynman Technique: A Pathway to Learning Anything in Data Analysis

You will learn the transformative Richard Feynman Technique for enhancing your data analysis skills.

Introduction

Richard Feynman, a Nobel Prize-winning physicist known for his groundbreaking contributions to quantum electrodynamics and particle physics, is also associated with an innovative approach to learning: the Richard Feynman Technique. Feynman was esteemed for his ability to convey highly complex scientific concepts in accessible language, and his legacy extends beyond theoretical physics into education, where the technique continues to enlighten and empower learners across diverse disciplines.

The Richard Feynman Technique is a particularly potent tool in statistics and data analysis. Data science is built from layered ideas such as estimators, model assumptions, and algorithms, and this simple, effective method helps professionals and students deconstruct complex statistical theories and methodologies until they are understandable and applicable in real-world scenarios. The technique's emphasis on clarity, comprehension, and the ability to teach back what one has learned makes it an invaluable asset in an increasingly data-driven world.

By integrating the Richard Feynman Technique into the study of data analysis, we unlock a pathway to deeper understanding, enhanced problem-solving skills, and more effective communication of statistical insights. This introduction sets the stage for exploring the profound impact of Feynman’s approach on the field of data science, shedding light on its principles and demonstrating its practical applications in the following sections.

Highlights

- The Richard Feynman Technique simplifies complex data concepts.

- Feynman’s method boosts retention in statistical learning.

- Apply Feynman’s approach to diverse data science problems.

- Feynman’s principles aid in clear data communication.

- The technique fosters a deep understanding of analytics.

The Richard Feynman Technique Explained

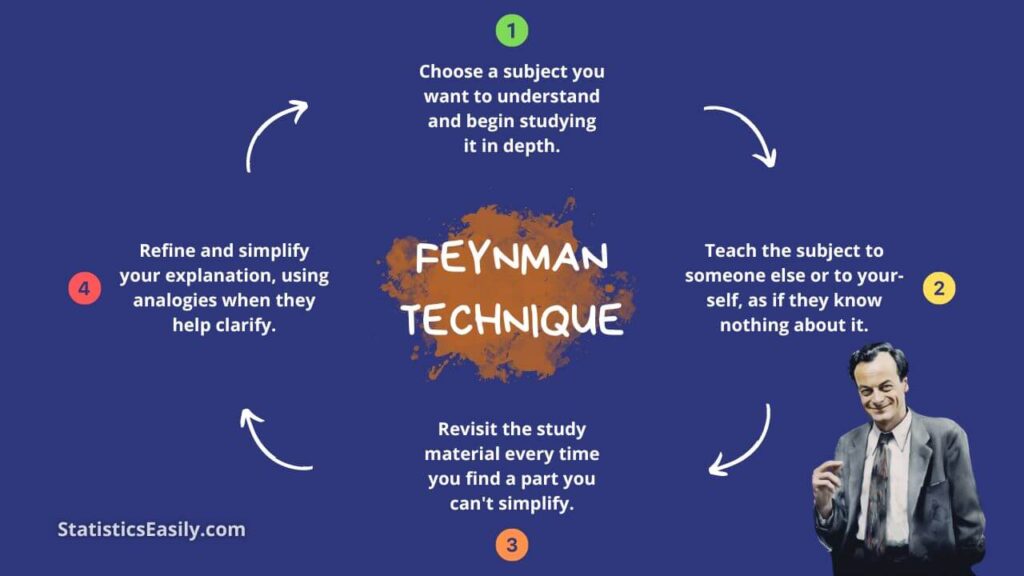

The Richard Feynman Technique, named after the renowned physicist, is a four-step method designed to enhance learning by transforming complex information into more straightforward, understandable concepts. This technique is particularly effective for mastering intricate statistical concepts in data analysis. Here, we break down its steps and discuss its application in statistics and data science.

Step 1: Break Down the Concept

Start by breaking down the complex statistical concept you wish to learn into its fundamental parts. For instance, if you’re tackling regression analysis, dissect it into its core components, such as the regression equation, variables, coefficients, and error terms.
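Written out in symbols, the components named above fit together in the standard multiple linear regression model. The notation below is ours, shown only to illustrate how Step 1 might label each part:

```latex
y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_p x_{ip} + \varepsilon_i, \qquad i = 1, \dots, n
```

Here y_i is the outcome for observation i, x_{i1}, ..., x_{ip} are the predictor variables, \beta_0 is the intercept, \beta_1, ..., \beta_p are the coefficients, and \varepsilon_i is the error term capturing whatever the predictors do not explain. Each symbol is one "part" you would then explain in plain words in Step 2.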

Step 2: Teach It to Someone Else

Attempt to explain the concept in your own words as if you are teaching it to someone else. This could be a real person or an imaginary audience. The key is to use plain language, avoiding jargon as much as possible. If you struggle to explain a part, it’s a sign that you need a deeper understanding of that aspect.

Step 3: Identify Gaps and Go Back to the Source Material

As you teach, you will likely encounter gaps in your understanding. When this happens, return to the source material to fill them. Repeating this cycle builds a solid grasp of the concept.

Step 4: Simplify and Use Analogies

Finally, refine your explanation by simplifying complex parts and using analogies. For example, you might liken statistical "bias" to a biased coin: just as a biased coin lands on one side more often than the other, a biased estimator systematically overestimates or underestimates the true value.
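To make the bias analogy concrete, here is a minimal simulation sketch. The choice of example is ours, not the article's: it assumes samples drawn from a standard normal distribution (true variance 1) and compares the sample variance computed by dividing by n with the version that divides by n - 1. Like a coin that keeps favoring heads, the divide-by-n estimator keeps coming in low on average.

```python
import random

def compare_variance_estimators(n=5, reps=20_000, seed=0):
    """Average two variance estimators over many samples from N(0, 1).

    The true variance is 1. Dividing the sum of squared deviations by n
    gives a biased estimator; dividing by n - 1 gives an unbiased one.
    """
    rng = random.Random(seed)
    biased_total = 0.0
    unbiased_total = 0.0
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        mean = sum(sample) / n
        ss = sum((x - mean) ** 2 for x in sample)  # sum of squared deviations
        biased_total += ss / n          # systematically too small on average
        unbiased_total += ss / (n - 1)  # correct on average
    return biased_total / reps, unbiased_total / reps

biased, unbiased = compare_variance_estimators()
print(f"divide by n:     {biased:.3f}")    # expected to sit near (n - 1) / n = 0.8
print(f"divide by n - 1: {unbiased:.3f}")  # expected to sit near 1.0
```

Any single sample can land above or below the truth; bias is about where the estimates land on average, which is exactly what the coin analogy is meant to convey.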

Application in Mastering Statistical Concepts

The Feynman Technique can be particularly transformative in data analysis, where professionals and students often grapple with multifaceted statistical theories and applications. This technique can demystify complex statistical models, algorithms, and data visualization techniques, making them more accessible and intuitive.

For instance, when applied to machine learning algorithms, this technique encourages learners to strip algorithms down to their basic operations, facilitating a deeper understanding of how they work and when they should be applied. Similarly, in the context of probability theory, the Feynman Technique can help elucidate the underlying principles and assumptions, enhancing one's ability to use them in real-world data analysis scenarios.

By adhering to the Richard Feynman Technique, individuals in the field of data science can bolster their comprehension of complex statistical concepts and improve their capacity to communicate these concepts clearly and effectively, a crucial skill in collaborative data projects and educational settings.

This approach fosters a culture of continuous learning and teaching, a cornerstone of growth and innovation in the ever-evolving data science landscape. As we delve into the subsequent sections, we will explore specific applications of this technique in data science and statistics, underscoring its practicality and wide-ranging benefits.

Richard Feynman Technique Application in Data Science and Statistics

The Richard Feynman Technique offers a robust framework for understanding and applying complex data science and statistics concepts. Through its straightforward, step-by-step process, this method aids in learning and the practical application of statistical methods. Here, we explore how this technique can be leveraged to master critical data science concepts and present hypothetical scenarios illustrating its effectiveness.

Regression Analysis

Consider the task of learning regression analysis, a fundamental data science concept used for predicting a continuous outcome variable based on one or more predictor variables. The Feynman Technique would involve:

- Breaking down the regression equation into its components.

- Explaining the significance of the coefficients.

- Clarifying the role of the intercept.

- Stating the assumptions underlying the model.

A hypothetical scenario might involve using this technique to clarify how changes in predictor variables influence the outcome, making the concept more tangible by relating it to real-world phenomena like housing prices or sales forecasting.
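A minimal sketch of that housing example, assuming a single predictor and ordinary least squares. The data are invented for illustration, and the function name `fit_simple_regression` is hypothetical. The slope is the coefficient ("each extra square meter adds about this much to the price"), the intercept is the baseline, and the residuals play the role of the error term.

```python
def fit_simple_regression(x, y):
    """Ordinary least squares for y = b0 + b1 * x with one predictor."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    # Slope: average change in y per one-unit change in x
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    b1 = sxy / sxx
    # Intercept: chosen so the fitted line passes through (x_mean, y_mean)
    b0 = y_mean - b1 * x_mean
    return b0, b1

# Hypothetical data: house size in square meters, price in thousands
sizes = [50, 70, 90, 110, 130]
prices = [150, 190, 232, 268, 310]

b0, b1 = fit_simple_regression(sizes, prices)
# Residuals: the part of each price the line does not explain (the error term)
residuals = [p - (b0 + b1 * s) for s, p in zip(sizes, prices)]
print(f"intercept = {b0:.2f}, slope = {b1:.2f}")  # intercept = 50.90, slope = 1.99
print("residuals:", [round(r, 2) for r in residuals])
```

The sketch does not check the model's assumptions (linearity, independent errors, constant variance); explaining why each one matters is a natural next step in the teaching exercise.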

Probability Theories

Probability theory forms the backbone of statistical reasoning and decision-making in data science. To grasp it, one could use the Feynman Technique to dissect probability distributions, such as the normal or binomial distribution, into more understandable elements. For instance, explaining the normal distribution by likening it to a real-life measurement, such as adult heights within a population, can make the concept more relatable and easier to understand.
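The two distributions can each be reduced to one plain idea, sketched below under stated assumptions. The binomial probability is "count the orderings, times the chance of any one ordering." For the normal case, the heights are simulated with a hypothetical mean of 170 cm and standard deviation of 10 cm (not real population figures) to show the familiar rule that about 68% of values fall within one standard deviation and about 95% within two.

```python
import math
import random

def binomial_pmf(k, n, p):
    """P(exactly k successes in n independent trials with success probability p)."""
    # comb(n, k) counts the orderings; p**k * (1 - p)**(n - k) is the chance of one ordering
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def share_within(values, mean, sd, k):
    """Fraction of values lying within k standard deviations of the mean."""
    return sum(abs(v - mean) <= k * sd for v in values) / len(values)

# Binomial: chance of exactly 5 heads in 10 fair coin flips (252 / 1024)
print(f"P(5 heads in 10 flips) = {binomial_pmf(5, 10, 0.5):.4f}")  # 0.2461

# Normal: simulated heights with a hypothetical mean of 170 cm and SD of 10 cm
rng = random.Random(42)
heights = [rng.gauss(170, 10) for _ in range(100_000)]
print(f"within 1 SD: {share_within(heights, 170, 10, 1):.3f}")  # roughly 0.68
print(f"within 2 SD: {share_within(heights, 170, 10, 2):.3f}")  # roughly 0.95
```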

Machine Learning Algorithms

Machine learning algorithms can be daunting due to their intricate workings and extensive applications. The Feynman Technique can demystify these algorithms by encouraging a learner to describe, in simple terms, how an algorithm like a decision tree makes predictions by sequentially asking binary questions. A case study might involve a simple dataset predicting customer churn, where the technique is used to explain how the algorithm identifies patterns in customer behavior.
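The "sequence of binary questions" idea can be sketched by finding just the first question a tree would ask. This is a simplified, CART-style greedy step using Gini impurity, not a full decision tree implementation. The churn records, field names, and helper functions are hypothetical and exist only for illustration.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0 when every label in the group is the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_question(rows, features, target):
    """Find the yes/no question 'feature <= threshold?' that best separates the target."""
    best = None  # (feature, threshold, weighted impurity)
    for feature in features:
        for threshold in sorted({row[feature] for row in rows}):
            yes = [row[target] for row in rows if row[feature] <= threshold]
            no = [row[target] for row in rows if row[feature] > threshold]
            if not yes or not no:
                continue  # a question that sends everyone one way separates nothing
            # Weight each side's impurity by its share of the rows
            score = (len(yes) * gini(yes) + len(no) * gini(no)) / len(rows)
            if best is None or score < best[2]:
                best = (feature, threshold, score)
    return best

# Hypothetical toy churn data (invented, not real customers)
customers = [
    {"tenure_months": 2, "support_calls": 5, "churned": 1},
    {"tenure_months": 3, "support_calls": 4, "churned": 1},
    {"tenure_months": 5, "support_calls": 6, "churned": 1},
    {"tenure_months": 24, "support_calls": 1, "churned": 0},
    {"tenure_months": 36, "support_calls": 0, "churned": 0},
    {"tenure_months": 18, "support_calls": 2, "churned": 0},
    {"tenure_months": 4, "support_calls": 1, "churned": 0},
    {"tenure_months": 30, "support_calls": 5, "churned": 1},
]

feature, threshold, score = best_question(
    customers, ["tenure_months", "support_calls"], "churned"
)
print(f"First question: is {feature} <= {threshold}? (weighted Gini = {score:.3f})")
```

On this toy data the best first question is whether support_calls is at most 2, which splits churners from non-churners perfectly. A full tree would repeat the same search inside each branch until the groups are pure or a depth limit is reached; libraries such as scikit-learn's DecisionTreeClassifier do this far more efficiently.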

Hypothetical Scenario: Understanding Neural Networks

Inspired by the architecture of the human brain, neural networks can seem complex, with their layers, neurons, and activation functions. Applying the Feynman Technique, one might start by likening a neuron to a light switch that flips on when the combined, weighted strength of its inputs crosses a threshold. This analogy helps conceptualize how individual neurons work together in a network to make complex decisions, thereby simplifying the learning process.
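The light-switch analogy corresponds closely to a single neuron with a step activation (a perceptron unit). In this minimal sketch the weights and bias are hand-picked, hypothetical values chosen so that the switch turns on only when both inputs are on, i.e. it behaves like a logical AND.

```python
def neuron(inputs, weights, bias):
    """A single artificial neuron with a step activation (perceptron unit)."""
    # Weighted sum: how strongly each input pushes the switch toward "on"
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Step activation: the switch flips on only when the total crosses zero
    return 1 if total > 0 else 0

# Hand-picked (hypothetical) weights that make the neuron act like logical AND
weights, bias = [1.0, 1.0], -1.5
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", neuron([a, b], weights, bias))
```

Real networks usually replace the on/off step with a smooth activation such as the sigmoid or ReLU, closer to a dimmer than a switch, so the weights can be learned by gradient descent rather than picked by hand.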

Statistical Hypothesis Testing

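Statistical hypothesis testing can be riddled with complex terminology and concepts. Using the Feynman Technique, one could explain hypothesis testing by comparing it to a courtroom trial. The null hypothesis plays the role of the defendant's presumed innocence: it is retained unless the evidence against it is strong enough, judged against a preset significance level, to reject it "beyond a reasonable doubt." As in court, a "not guilty" verdict is not proof of innocence, so failing to reject the null hypothesis does not prove it true. Convicting an innocent defendant corresponds to a Type I error, and acquitting a guilty one corresponds to a Type II error. This framing makes the null hypothesis, alternative hypothesis, significance levels, and Type I and II errors more intuitive.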
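The courtroom analogy can be made concrete with a small exact test. This sketch assumes a hypothetical "trial" of a coin: the null hypothesis is that the coin is fair, and the evidence is 60 heads in 100 flips. The two-sided p-value sums the probabilities, under the null hypothesis, of every outcome no more likely than the one observed. The function name is ours; in practice a library routine such as SciPy's scipy.stats.binomtest performs this kind of exact test.

```python
import math

def binomial_two_sided_p(k, n, p0=0.5):
    """Exact two-sided binomial test p-value: total probability, under H0,
    of all outcomes no more likely than the observed count k."""
    pmf = [math.comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(n + 1)]
    observed = pmf[k]
    # Small relative tolerance so floating-point ties still count as "as extreme"
    return min(1.0, sum(q for q in pmf if q <= observed * (1 + 1e-9)))

alpha = 0.05  # the risk of a Type I error (convicting an innocent coin) we accept
p_value = binomial_two_sided_p(60, 100)
print(f"p-value = {p_value:.4f}")
if p_value < alpha:
    print("Reject H0: the evidence is strong enough to call the coin unfair.")
else:
    print("Fail to reject H0: 'not guilty', which is not proof the coin is fair.")
```

For 60 heads in 100 flips the p-value comes out slightly above 0.05, so the verdict is "fail to reject": suggestive evidence, but not enough to convict at this significance level.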

By applying the Richard Feynman Technique to data science and statistics, learners can achieve a deeper understanding of complex concepts, enhance their problem-solving skills, and effectively communicate technical knowledge. This approach makes learning more engaging and meaningful, bridging the gap between theoretical understanding and practical application.

Richard Feynman Technique Benefits for Data Professionals

The Richard Feynman Technique provides many advantages for data professionals, significantly enhancing their analytical skills, comprehension, retention, and communication of complex ideas. This method, emphasizing clarity and simplicity, is particularly beneficial in the multifaceted field of data science and statistics.

Improved Comprehension

The first step of the Feynman Technique involves breaking down complex concepts into fundamental components, which fosters a deeper understanding of the subject matter. For data professionals, this means grasping intricate statistical models, data analysis techniques, and machine learning algorithms more intuitively. This enhanced comprehension aids in more effectively applying these concepts to solve real-world problems.

Enhanced Retention

Teaching a concept, whether to someone else or to themselves, helps data professionals reinforce their learning and improve retention. This aspect of the technique helps ensure that knowledge is not only understood but also retained for long-term use. This is especially important in data science, where professionals continually build upon foundational knowledge to keep pace with evolving technologies and methodologies.

Effective Communication

The Feynman Technique encourages explaining complex concepts in simple, straightforward language. This skill is invaluable for data professionals, as it allows them to convey technical information to stakeholders, team members, and clients who may not have a background in data science. Communicating complex ideas effectively is crucial for collaboration, decision-making, and driving business strategies.

Problem-Solving Skills

Applying the Feynman Technique helps identify gaps in understanding, a critical step in problem-solving. By returning to the source material to fill these gaps, data professionals develop a more robust problem-solving approach, enabling them to tackle complex data-related challenges more confidently and efficiently.

Continuous Learning

The iterative nature of the Feynman Technique fosters a culture of continuous learning and improvement. Data professionals who practice this technique are well-equipped to keep up with the rapid advancements in data science and statistics, as they are adept at breaking down new concepts, learning them thoroughly, and applying them in their work.

The Richard Feynman Technique offers significant benefits for data professionals, enhancing their ability to understand, retain, and communicate complex statistical and data science concepts. By incorporating this technique into their learning and professional practices, data professionals can improve their analytical capabilities, contribute more effectively to their teams and projects, and advance their careers in the ever-evolving field of data science.

Practical Tips for Implementing the Feynman Technique

Integrating the Richard Feynman Technique into your study or work routine, especially within data analytics and statistical modeling, can significantly enhance your understanding and application of complex concepts. Here are some practical tips to effectively employ this technique in your data science practices:

Start with the Basics

- Identify the Core Concept: Choose the complex concept or topic you want to understand better. It could be a statistical model, a data analysis method, or a machine learning algorithm.

- Break It Down: Decompose the concept into its most basic components. For example, if you’re dealing with a complex algorithm, break it down into its steps or processes.

Create a Teaching Scenario

- Teach Out Loud: Explain the concept as if you are teaching it to someone else. This could be a real person, an imaginary audience, or an inanimate object. The key is to articulate your understanding verbally.

- Use Simple Language: Avoid technical jargon and explain the concept in the simplest terms possible. If you can't explain it simply, you don't yet understand it well enough.

Refine Your Understanding

- Identify Gaps: Pay attention to areas where you struggle to explain clearly. These are the gaps in your understanding.

- Review and Research: To fill these gaps, return to the original sources or seek additional resources. This might involve reading textbooks, watching tutorials, or discussing with peers.

Simplify and Use Analogies

- Use Analogies: Relate complex concepts to everyday experiences or familiar situations. For example, compare a neural network’s function to the human brain’s decision-making process.

- Simplify Your Explanations: Once you’ve filled the gaps in your understanding, revisit and simplify your explanation. Remove any remaining jargon and clarify complex parts.

Apply Practically

- Work on Real Problems: Apply the concept to real-world problems or datasets. Practical application will test your understanding and help solidify the concept in your mind.

- Iterate the Process: The Feynman Technique is iterative. As you apply concepts practically, new questions or gaps may emerge. Use these as opportunities to deepen your understanding.

Collaborate and Get Feedback

- Discuss with Peers: Discuss the concept with colleagues or peers. Teaching and debating ideas can offer new insights and reinforce your understanding.

- Seek Feedback: Ask both experts and novices for feedback on your explanations. This can help you gauge their clarity and identify areas for improvement.

Keep a Learning Journal

- Document Your Learning: Keep a journal of the concepts you’ve tackled using the Feynman Technique. Write down your initial explanations, the gaps you identified, and how you simplified the concept after filling those gaps.

- Review Regularly: Review your journal entries to reinforce your learning and reflect on your progress.

By following these tips and consistently applying the Richard Feynman Technique, data professionals can deepen their understanding of complex topics, enhance their ability to retain and recall information, and improve their skills in communicating intricate ideas in a clear and accessible manner. This approach not only aids in personal mastery of data science concepts but also fosters a collaborative and learning-oriented environment within teams and organizations.

Feynman’s Legacy in Modern Data Education

Richard Feynman’s innovative learning and problem-solving approach continues to impact modern data science and statistics education profoundly. His technique, emphasizing simplicity, clarity, and depth of understanding, aligns perfectly with the needs of today’s data professionals who navigate complex and evolving datasets, algorithms, and analytical methods. This section explores how Feynman’s legacy lives in contemporary education and highlights tools and platforms inspired by his principles.

Embracing Complexity through Simplicity

The Richard Feynman Technique is foundational for demystifying intricate concepts in data science education. Educators and learners apply Feynman’s method to break down complex algorithms, statistical models, and big data challenges into their core components, making these topics more accessible and understandable. This approach not only aids in learning but also fosters a deeper appreciation for the elegance and efficiency of data science solutions.

Interactive Learning Platforms

Modern educational platforms embody the spirit of Feynman’s technique by offering interactive, user-friendly environments where complex ideas are taught through simple, engaging methods. Tools like Jupyter Notebooks, Kaggle, and DataCamp allow learners to experiment with code, visualize data, and apply statistical theories in real time, echoing Feynman’s belief in the power of hands-on learning and experimentation.

Collaborative Problem-Solving

The collaborative nature of platforms such as GitHub and Stack Overflow reflects Feynman's emphasis on teaching and learning from others. These communities thrive on exchanging ideas, where explanations are simplified, questions are encouraged, and complex problems are tackled collectively, much as Feynman himself learned and made discoveries in collaboration with others.

Visualization Tools

Visualization tools like Tableau, Power BI, and Matplotlib in Python make it easier to communicate complex data insights in simple, intuitive formats. These tools resonate with Feynman’s knack for using diagrams and analogies to explain complex physics phenomena, highlighting the importance of clear communication in data science.

Continuous Learning Resources

The abundance of MOOCs (Massive Open Online Courses) from platforms like Coursera, edX, and Udacity, offering data science and analytics courses, embodies Feynman’s lifelong learning ethos. These resources make advanced education accessible to all, encouraging a culture of continuous skill development and curiosity-driven exploration, much like Feynman’s educational journey.

Feynman’s Influence on Educational Content

Feynman's legacy is also evident in the wealth of educational content that adopts his clear, engaging, and enthusiastic teaching style. From textbooks and tutorials to blogs and YouTube channels dedicated to data science and statistics, educators strive to emulate Feynman's ability to inspire and illuminate complex topics with simplicity and joy.

Richard Feynman’s approach to learning and problem-solving has left an indelible mark on data science and statistics education. His techniques encourage a deeper understanding of complex subjects, foster clear communication, and promote a collaborative, hands-on approach to learning. By incorporating these principles, modern educational tools and platforms continue to advance the field of data science, making it more accessible and engaging for learners worldwide.

Conclusion

This article delves into the transformative power of the Richard Feynman Technique in data analysis, statistics, and broader data science education. From its roots in the ingenious mind of physicist Richard Feynman, this method has emerged as a beacon for those navigating the complexities of modern data-driven disciplines.

We explored how the Feynman Technique simplifies intricate data concepts, enhancing retention and enabling clear communication of sophisticated ideas. By breaking down complex topics into their fundamental parts, teaching these concepts in simple terms, identifying knowledge gaps, and using analogies, this technique fosters a deeper understanding and application of statistical models, algorithms, and data analysis methods.

Practical applications of the Feynman Technique in data science and statistics were highlighted, showcasing its versatility in demystifying topics such as regression analysis, probability theory, machine learning algorithms, and more. We also provided practical tips for integrating this approach into daily study and work routines, emphasizing the value of simplicity, continuous learning, and collaboration.

Furthermore, we acknowledged Feynman’s lasting impact on modern data education, illustrated by the plethora of interactive platforms, collaborative tools, and educational resources that embody his principles. These tools facilitate the learning process and encourage a hands-on, exploratory approach to data science, much like Feynman’s methods.

In conclusion, the Richard Feynman Technique is an invaluable asset for data professionals, educators, and students. It empowers individuals to tackle the complexities of data science with confidence, clarity, and a sense of curiosity that drives continual growth and innovation. As we navigate the ever-expanding landscape of data science, adopting the Feynman Technique can significantly enhance our ability to learn, apply, and communicate complex concepts effectively.

We encourage readers to embrace this technique in pursuing data science and statistical knowledge. By doing so, you honor the legacy of a brilliant mind and equip yourself with a powerful tool for mastering the art and science of data analysis.

Frequently Asked Questions (FAQs)

Q1: What is the Richard Feynman Technique? It's a learning method that involves restating complex information in simple terms and teaching it back to oneself. This enhances understanding and retention and is particularly effective in data analysis.

Q2: How can the Feynman Technique be applied in data analysis? By breaking down intricate statistical concepts into more understandable parts, practicing explaining these concepts in simple terms, and using analogies relevant to data science.

Q3: Why is the Feynman Technique beneficial for data professionals? It fosters a deeper understanding of complex data analysis techniques, improves problem-solving skills, and enhances the ability to communicate technical information.

Q4: Can the Feynman Technique improve data visualization skills? By applying Feynman’s principles, one can better understand and explain the rationale behind data visualization choices, leading to more impactful and insightful visual representations.

Q5: How does the Feynman Technique aid in learning new programming languages for data science? It involves deconstructing the language’s syntax and functionality into fundamental concepts and then reconstructing them to build a solid understanding and fluency.

Q6: What role does the Feynman Technique play in mastering machine learning algorithms? It helps demystify complex algorithms by encouraging learners to explain them simply, solidifying their understanding and application.

Q7: Can the Feynman Technique help in statistical hypothesis testing? It can simplify understanding various hypothesis tests by encouraging a clear and straightforward explanation of test assumptions, processes, and interpretations.

Q8: How can one implement the Feynman Technique in collaborative data projects? One way is to explain project goals, data analysis processes, and findings to team members in simple terms, thereby ensuring clarity and alignment among team members.

Q9: Does the Feynman Technique have a role in data ethics and privacy? Yes, it can be used to simplify and clarify the ethical considerations and privacy regulations in data science, making them more accessible and understandable to practitioners.

Q10: How can educators incorporate the Feynman Technique into the data science curriculum? They can encourage students to learn through teaching, simplify complex concepts, and apply the technique to real-world data problems, thus enhancing their learning experience.