When is P Value Significant? Understanding its Role in Hypothesis Testing

A p value is considered significant when it falls below a pre-determined significance level, typically 0.05. This indicates that data at least as extreme as those observed would be unlikely if the null hypothesis (H0) were true, which counts as evidence against H0.

When is p value significant?

A p value is considered significant when it is less than a pre-determined significance level, often set at 0.05. This threshold means accepting a 5% chance of incorrectly rejecting the null hypothesis (H0) when it is true (a Type I error). A significant p-value suggests that the observed data would be unlikely if chance alone were at work, providing evidence against the null hypothesis (H0) in favor of the alternative hypothesis. However, it’s crucial to interpret p values cautiously, considering factors such as effect size, statistical power, and potential issues with multiple testing.

Highlights

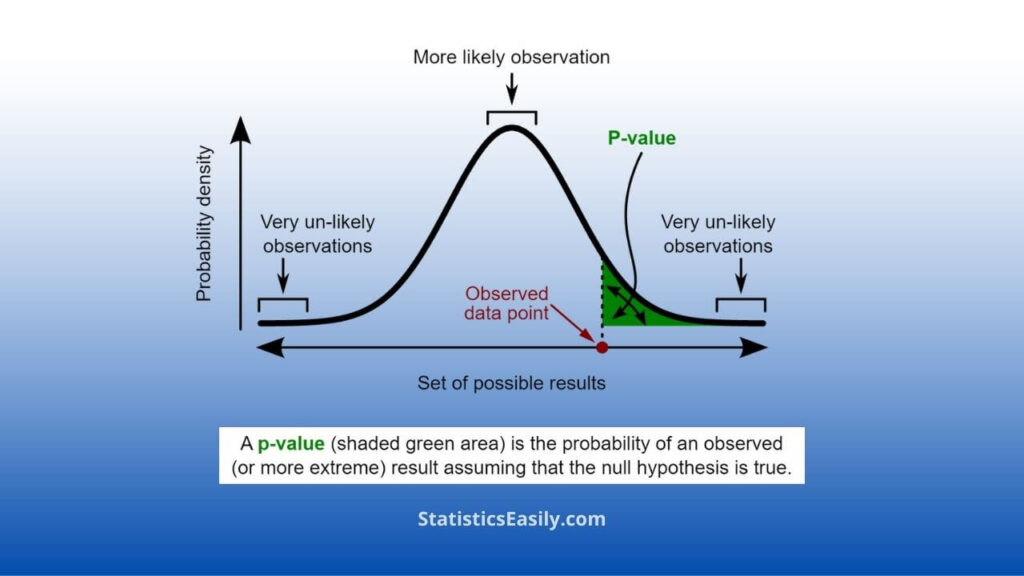

- The p-value represents the probability of observing the data (or more extreme results) if the null hypothesis (H0) is true.

- The significance level (alpha) is a threshold for determining p value significance, commonly set at 0.05.

- Type I error (false-positive) occurs when the null hypothesis is falsely rejected; the significance level represents this error probability.

- The p-value is influenced by a study’s sample size and effect size.

- Larger sample sizes can lead to significant p values even for small effect sizes.

What is the P-value?

The p value is a probability that quantifies the strength of evidence against a null hypothesis in a statistical test. It represents the probability of observing the data, or more extreme results, if the null hypothesis is true. The lower the p-value, the stronger the evidence against the null hypothesis.

For a practical example, imagine a drug trial testing the effectiveness of a new medication. The null hypothesis states that the drug has no effect, while the alternative hypothesis claims that the drug is effective. After conducting the trial and analyzing the data, a p value of 0.02 is obtained. This means there is a 2% chance of observing the data or more extreme results if the drug has no effect. Since the p-value is less than the standard significance level of 0.05, we reject the null hypothesis and conclude that there is evidence the drug is effective.
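To make the drug trial concrete, here is a minimal sketch of how such a p-value could be computed with a two-proportion z-test. The recovery counts and the function name `two_proportion_p_value` are hypothetical, chosen only so that the result lands near the 0.02 of the example; a real trial might well call for a different test.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_p_value(success_a, n_a, success_b, n_b):
    """Two-sided p-value for H0: both groups share the same success rate."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Under H0 the two groups are pooled to estimate the common rate.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Probability of a z statistic at least this extreme in either direction.
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical counts: 61 of 100 patients recover on the drug, 45 of 100 on placebo.
alpha = 0.05
p_value = two_proportion_p_value(61, 100, 45, 100)
print(f"p = {p_value:.4f}, significant at alpha = {alpha}: {p_value < alpha}")
```

The comparison `p_value < alpha` is the whole decision rule: the p-value comes from the data, while alpha is fixed before the data are seen.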

What is the Significance Level (α)?

The significance level, denoted by alpha (α), is a pre-determined threshold for deciding when a p-value is significant. It represents the probability of making a Type I error, which occurs when the null hypothesis (H0) is rejected even though it is true. Commonly used significance levels are 0.05, 0.01, and 0.001, corresponding to a 5%, 1%, and 0.1% chance of making a Type I error, respectively.

In hypothesis testing, if the p value is less than or equal to the chosen significance level (α), the null hypothesis (H0) is rejected, and the results are deemed statistically significant. The choice of the significance level depends on the specific field of study, the nature of the research question, and the consequences of making a Type I error. Lower significance levels are generally used when the cost of making a Type I error is high, while higher levels may be applied in exploratory research where Type I errors are less consequential.
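The link between α and the Type I error rate can be checked by simulation. The sketch below assumes a simple setting that is not part of the original discussion: a one-sample z-test on normally distributed data with known standard deviation, where the null hypothesis is true by construction. The function names and the number of simulated experiments are illustrative choices.

```python
import random
from math import sqrt
from statistics import NormalDist, mean

def z_test_p_value(sample, mu0=0.0, sigma=1.0):
    """Two-sided p-value for H0: population mean equals mu0 (sigma known)."""
    z = (mean(sample) - mu0) / (sigma / sqrt(len(sample)))
    return 2 * (1 - NormalDist().cdf(abs(z)))

def false_positive_rate(alpha, n_experiments=5000, n=30, seed=0):
    """Share of simulated experiments that reject H0 although H0 is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_experiments):
        # H0 holds here: every sample is drawn with true mean 0.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p_value(sample) <= alpha:
            rejections += 1
    return rejections / n_experiments

for alpha in (0.05, 0.01):
    print(f"alpha = {alpha}: false-positive rate = {false_positive_rate(alpha):.3f}")
```

With enough simulated experiments, the share of false rejections should settle close to the chosen α, which is what it means for α to be the Type I error probability.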

P-value x Sample size x Effect size

The p value is influenced by a study’s sample size and the effect size. Sample size refers to the number of observations or participants in the study, whereas effect size represents the magnitude of the relationship or difference between groups under investigation.

Sample size: As we increase the sample size, the test’s statistical power also increases, making it more likely to detect true effects and reject the null hypothesis. Even minor effects can result in significant p-values with larger sample sizes. Conversely, with smaller sample sizes, the test may fail to detect true effects, leading to non-significant p values.

Effect size: The effect size reflects the practical importance of the observed relationship or difference. A larger effect size implies a stronger relationship or more substantial difference between groups. P-values can be significant even for small effect sizes, especially in studies with large sample sizes. Therefore, it is crucial to consider the effect size alongside the p value when interpreting the results of a study.
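The interplay between sample size and effect size can be sketched numerically. The example assumes a one-sample z-test in which the observed standardized mean difference is held fixed at a small value (0.1 standard deviations, a hypothetical figure) while only the sample size changes; `p_value_for_effect` is an illustrative helper, not a library function.

```python
from math import sqrt
from statistics import NormalDist

def p_value_for_effect(effect_size, n):
    """Two-sided z-test p-value for an observed standardized mean
    difference of effect_size in a sample of n observations."""
    z = effect_size * sqrt(n)  # the test statistic grows with sqrt(n)
    return 2 * (1 - NormalDist().cdf(abs(z)))

effect_size = 0.1  # small effect, identical in every row
for n in (25, 400, 10_000):
    p = p_value_for_effect(effect_size, n)
    print(f"n = {n:>6}  p = {p:.4f}  significant at 0.05: {p < 0.05}")
```

The effect is equally small in all three cases; only the amount of data changes whether it crosses the 0.05 threshold.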

Common Misconceptions About P-values

P-value as the probability of the null hypothesis: A common misunderstanding is that the p value represents the probability that the null hypothesis (H0) is true. However, the p-value quantifies the probability of observing the data or more extreme results if the null hypothesis (H0) is true, not the probability of the null hypothesis itself.

P-value as a measure of effect size: Some believe a lower p value indicates a larger effect size, but this is false. The p-value depends on both the effect size and the sample size; it is possible to have a small effect size with a significant p-value when the sample size is large.

Equating non-significance with equivalence: A non-significant p-value does not mean there is no effect or that the null hypothesis is true. It merely indicates that there is insufficient evidence to reject the null hypothesis. The lack of significance could be due to a small sample size, low statistical power, or other factors.
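To see why a non-significant result is weak evidence of no effect, it helps to compute statistical power directly. The sketch below assumes a two-sided one-sample z-test and a true standardized effect of 0.3; both the effect value and the helper name `z_test_power` are assumptions made for illustration.

```python
from math import sqrt
from statistics import NormalDist

def z_test_power(effect_size, n, alpha=0.05):
    """Probability that a two-sided one-sample z-test rejects H0
    when the true standardized effect equals effect_size."""
    norm = NormalDist()
    z_crit = norm.inv_cdf(1 - alpha / 2)  # rejection cutoff for |z|
    shift = effect_size * sqrt(n)         # mean of the z statistic under the true effect
    return norm.cdf(shift - z_crit) + norm.cdf(-shift - z_crit)

for n in (20, 90, 200):
    power = z_test_power(0.3, n)
    print(f"n = {n:>3}  power = {power:.3f}  chance of a non-significant result = {1 - power:.3f}")
```

At the smallest sample size, the test misses a real effect most of the time, so a non-significant p-value there says little about whether the effect exists.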

Using a fixed significance level for all studies: While 0.05 is a typical significance level, it is not appropriate for every study. The chosen significance level should depend on the context of the research and the consequences of making a Type I error.

Ignoring the context and practical significance: Focusing solely on the p value can lead to overlooking the practical importance of the observed effects. Therefore, it is essential to consider the effect size, confidence intervals, and the real-world implications of the findings alongside the p-value.

FAQ: When is p value significant?

A p value measures the strength of evidence against the null hypothesis by calculating the probability of obtaining the observed (or even more extreme) results, assuming the null hypothesis is true.

A p value is considered significant when it is less than or equal to a pre-determined significance level (commonly 0.05), indicating sufficient evidence to reject the null hypothesis.

The significance level (alpha) is a threshold for determining when a p-value is significant, commonly set at 0.05, representing the probability of a Type I error.

Larger sample sizes increase statistical power, making it more likely to detect true effects and obtain significant p-values even for small effect sizes.

The p-value depends on effect size and sample size; a significant p value can occur with small effect sizes when the sample size is large.

A Type I error (false-positive) occurs when the null hypothesis (H0) is falsely rejected when it is true; the significance level represents the probability of making a Type I error.

A significant p value does not guarantee a large or practically important effect; it’s crucial to consider effect size alongside the p-value.

A non-significant p-value indicates insufficient evidence to reject the null hypothesis (H0); it does not prove the null hypothesis or demonstrate that there is no effect.

The significance level should be chosen based on the research context, field of study, and the consequences of making a Type I error.

Considering effect size, confidence intervals, and real-world implications alongside p values provides a more comprehensive understanding of the study results.