Data Transformations for Normality: Essential Techniques

You will learn the pivotal role of data transformations for normality in achieving accurate and reliable statistical insights.

Introduction

Pursuing truth in data analysis beckons for precision, clarity, and an unwavering commitment to authenticity. These ideals are deeply rooted in understanding and applying data transformations for normality. This article serves as a beacon for statisticians, data scientists, and researchers, guiding them through statistical data’s labyrinth to unveil the hidden core truths. By embarking on this journey, readers are equipped with the knowledge to perform these transformations and grasp their profound significance in the broader context of statistical analysis, ensuring data integrity and the reliability of ensuing interpretations. Herein lies a comprehensive exploration, meticulously crafted to illuminate the path toward achieving normality in data, a foundational pillar in the quest for genuine insights and the revelation of the inherent beauty in data’s truth.

Highlights

- Log transformation can significantly reduce skewness in data.

- Box-Cox transformation optimizes normality across diverse datasets.

- Normality tests guide the choice of data transformation methods.

- Transformed data can better satisfy the assumptions of parametric statistical tests.

- Visualization tools are vital in assessing transformation effectiveness.

The Essence of Normality in Data

Theoretical Foundations

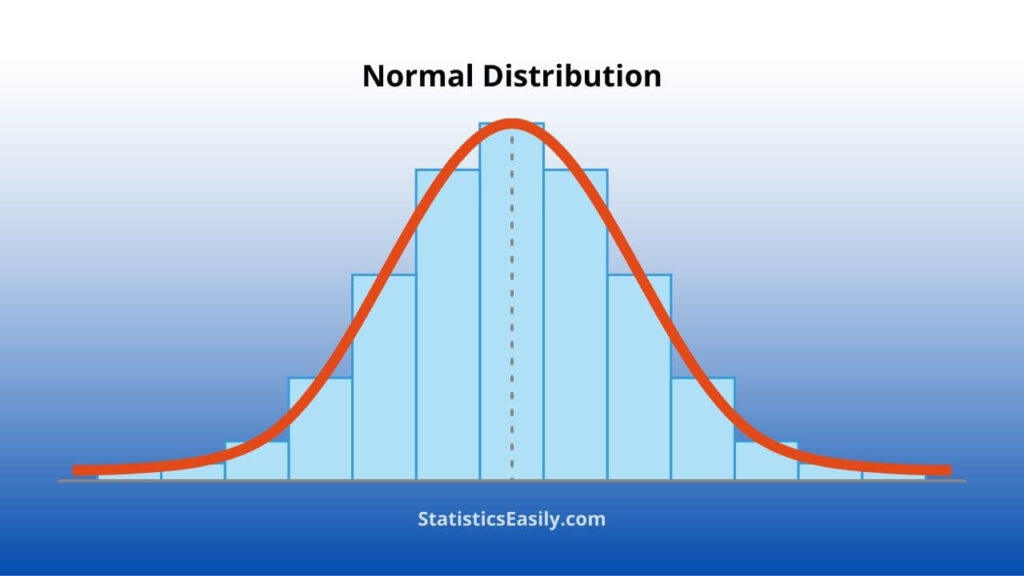

At the core of statistical analysis lies the principle of normality. This concept denotes data distributions that are symmetric and bell-shaped, centered around a mean value. This fundamental aspect of data is not merely a mathematical convenience but a reflection of the inherent patterns and truths that nature and human activities often exhibit. In statistics, normality is not just an assumption but a bridge to deeper insights, enabling the application of many statistical tests and models that assume data follow this distribution. The significance of data transformations for normality stems from the need to align real-world data with this idealized model, thereby unlocking the potential for genuine insights and more reliable conclusions. It’s a testament to the enduring quest for truth in data interpretation, ensuring that findings are statistically significant and reflect the underlying phenomena.

Practical Relevance

The quest for normality transcends theoretical considerations, manifesting in tangible benefits across various domains of research and decision-making. In fields as diverse as healthcare, economics, engineering, and social sciences, achieving data normality through transformations is not merely a statistical exercise but often a prerequisite for extracting valid and actionable insights. For instance, in healthcare, accurate analysis of patient data can lead to better treatment plans and outcomes. In economics, it can inform policy decisions that affect millions. By transforming data to approximate normality, researchers and practitioners can apply a broader range of parametric statistical tests, enhancing the robustness and validity of their findings. This process therefore supports sound research and evidence-based decision-making that can benefit society, turning data into a tool for positive change and a deeper understanding of the world.

—

Data Transformations for Normality: Techniques Explored

Common Transformations

In achieving normality in data distributions, several data transformations for normality stand out for their efficacy and widespread applicability. These techniques are powerful tools to reshape and align data more closely with the normal distribution, a fundamental prerequisite for many statistical analyses.

Log Transformation: A cornerstone method, particularly effective for data that exhibit exponential growth or significant right skewness. By applying the natural logarithm to each data point, the log transformation can substantially reduce skewness, bringing the data closer to normality. This transformation is especially prevalent in financial data analysis, where variables span several orders of magnitude.

Square Root Transformation: Applied to right-skewed data, this technique is less potent than the log transformation but still effective in reducing variability and skewness. It is beneficial for count data, where the variance increases with the mean.

Box-Cox Transformation: A more versatile approach encompassing a family of power transformations. The Box-Cox transformation has a parameter, λ, that is estimated from the data (typically by maximum likelihood) to give the best approximation of normality; λ = 0 corresponds to the log transformation. It requires strictly positive data and is widely used in scenarios where the optimal transformation is not immediately apparent.

Each of these transformations has specific contexts and conditions under which it is most effective. Their applicability hinges on the nature of the data, necessitating a thorough initial analysis to diagnose the extent and type of deviation from normality.
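To make the difference in strength between the log and square root transformations concrete, here is a minimal sketch using only the Python standard library. The sample is hypothetical (values that grow exponentially), and the `skewness` helper is an illustrative name, not a library function.

```python
import math
import statistics


def skewness(values):
    """Population skewness: the mean cubed z-score (0 for symmetric data)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(((v - mean) / sd) ** 3 for v in values) / len(values)


# Hypothetical right-skewed sample: values that grow exponentially.
raw = [math.exp(0.1 * i) for i in range(1, 51)]

sqrt_data = [math.sqrt(v) for v in raw]  # milder compression of large values
log_data = [math.log(v) for v in raw]    # stronger compression of large values

for name, data in [("raw", raw), ("sqrt", sqrt_data), ("log", log_data)]:
    print(f"{name:>4}: skewness = {skewness(data):.3f}")
```

On this sample the square root reduces the skewness and the log removes it almost entirely, because the raw values were constructed as exponentials of evenly spaced numbers. Real data will rarely behave this neatly.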

Advanced Techniques

For seasoned statisticians and data scientists, more sophisticated methods provide nuanced ways to address complex non-normality issues:

Johnson Transformation: An adaptable system of transformations capable of handling a broader range of data shapes and sizes, including bounded and unbounded data. This method selects from a family of transformations to best fit the data to a normal distribution.

Yeo-Johnson Transformation: An extension of the Box-Cox transformation that can be applied to both positive and negative data. This flexibility makes it a valuable tool in datasets where negative values are meaningful and cannot be simply offset or removed.

Quantile Normalization: Often used in genomic data analysis, this technique maps the ranked data points onto the quantiles of a reference distribution (a normal distribution when normality is the goal), effectively standardizing data across different samples or experiments.

The choice between these advanced techniques and more common transformations depends on the data’s characteristics and the specific requirements of the subsequent analysis. Each method has strengths and limitations, and detailed exploratory data analysis and consideration of the goals should guide the decision.
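Two of the advanced techniques above can be sketched with SciPy. The example below is an illustration under stated assumptions rather than a recommended recipe: the data are simulated, and the rank-based step is a simplified single-sample analogue of quantile normalization that maps ranks onto standard normal quantiles using a `(rank - 0.5) / n` offset, which is one common choice among several.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
# Hypothetical right-skewed sample that includes negative values.
x = rng.exponential(scale=2.0, size=500) - 1.0

# Yeo-Johnson: lambda is estimated by maximum likelihood when not supplied.
yj_data, yj_lambda = stats.yeojohnson(x)

# Rank-based mapping onto standard normal quantiles.
ranks = stats.rankdata(x)
rank_normal = stats.norm.ppf((ranks - 0.5) / len(x))

print(f"skewness raw:         {stats.skew(x):.3f}")
print(f"skewness Yeo-Johnson: {stats.skew(yj_data):.3f} (lambda = {yj_lambda:.3f})")
print(f"skewness rank-based:  {stats.skew(rank_normal):.3f}")
```

The rank-based result is symmetric by construction, but it discards the spacing between the original values, so it trades interpretability for shape.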

In employing these data transformations for normality, it is crucial to maintain a clear understanding of the transformation’s impact on the data and the interpretation of results. The transformed data may adhere to the assumptions of parametric tests. However, the original meaning of the data points, and thus the interpretability, can be altered. Therefore, a careful balance must be struck between achieving statistical prerequisites and preserving the integrity and interpretability of the data.
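A small worked example shows how interpretation shifts after a log transformation. Averaging on the log scale and then back-transforming gives the geometric mean of the original values, not their arithmetic mean. The three values below are hypothetical.

```python
import math
import statistics

# Hypothetical measurements spanning several orders of magnitude.
values = [1.0, 10.0, 100.0]

log_values = [math.log(v) for v in values]
mean_log = statistics.fmean(log_values)

back_transformed = math.exp(mean_log)        # geometric mean of the raw data
arithmetic_mean = statistics.fmean(values)   # mean on the original scale

print(f"back-transformed mean of logs: {back_transformed:.2f}")
print(f"arithmetic mean:               {arithmetic_mean:.2f}")
```

When reporting results from transformed data, it helps to state which of these two quantities a summary refers to.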

Step-by-Step Guide

Pre-Transformation Analysis

Before diving into transformations, it’s critical to assess the need through a thorough analysis. This begins with:

1. Visual Inspection: Use plots such as histograms, Q-Q (quantile-quantile) plots, and box plots to assess the distribution of your data visually.

2. Statistical Tests: Use tests like the Shapiro-Wilk or Kolmogorov-Smirnov to quantitatively test for normality. Each test returns a p-value; a small p-value (conventionally below 0.05) indicates that the data deviate significantly from a normal distribution.
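The statistical checks in this step might look like the following in Python with SciPy. This is a sketch on simulated log-normal data standing in for a real dataset. One assumption to note: the Kolmogorov-Smirnov test is run on standardized values, and because the mean and standard deviation are estimated from the same sample, its p-value is only approximate.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
# Hypothetical right-skewed sample (log-normal), standing in for real data.
data = rng.lognormal(mean=0.0, sigma=1.0, size=200)

skew = stats.skew(data)
shapiro_stat, shapiro_p = stats.shapiro(data)

# Kolmogorov-Smirnov against a standard normal after standardizing.
z = (data - data.mean()) / data.std(ddof=1)
ks_stat, ks_p = stats.kstest(z, "norm")

print(f"skewness:           {skew:.3f}")
print(f"Shapiro-Wilk:       W = {shapiro_stat:.3f}, p = {shapiro_p:.3g}")
print(f"Kolmogorov-Smirnov: D = {ks_stat:.3f}, p = {ks_p:.3g}")
```

For the visual side, the same `data` array can be passed to a histogram or Q-Q plot routine in the plotting library of your choice.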

Transformation Process in R and Python

Here’s a concise guide to applying common data transformations using R and Python, two of the most prevalent tools in statistical analysis and data science.

Log Transformation:

- R: transformed_data <- log(original_data)

- Python (using NumPy, imported as np): transformed_data = np.log(original_data) # requires strictly positive values

Square Root Transformation:

- R: transformed_data <- sqrt(original_data)

- Python (using NumPy, imported as np): transformed_data = np.sqrt(original_data) # requires non-negative values

Box-Cox Transformation:

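- R (using the MASS package): bc <- MASS::boxcox(lm(original_data ~ 1), plotit = FALSE); lambda <- bc$x[which.max(bc$y)]; transformed_data <- (original_data^lambda - 1) / lambda # MASS::boxcox returns the log-likelihood profile over λ, not the transformed data; use log(original_data) if lambda is 0, and shift the data first (e.g. original_data + 1) if it contains zeros

- Python (using SciPy): transformed_data, best_lambda = scipy.stats.boxcox(original_data + 1) # The + 1 is needed only if the data contain zeros; the input must be strictly positive

Remember, the choice of transformation depends on your data’s characteristics and the distribution you aim to achieve. The log and Box-Cox transformations are undefined for zero or negative values, so if your data contain such values, add a constant large enough to make every value positive before transforming, or use a method such as Yeo-Johnson that accepts them. If the data are already strictly positive, no constant is needed.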
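As a fuller Python illustration of the Box-Cox step, the sketch below shifts the data only when it is needed and then checks SciPy's output against the Box-Cox definition. The count data are simulated, and the shift rule (moving the minimum to 1) is an assumption chosen for illustration; other constants are equally defensible and will change the estimated λ.

```python
import numpy as np
from scipy import special, stats

rng = np.random.default_rng(1)
# Hypothetical non-negative data that contains zeros (e.g. counts).
original_data = rng.poisson(lam=3.0, size=300).astype(float)

# Box-Cox needs strictly positive input, so shift only if necessary.
shift = 0.0 if original_data.min() > 0 else 1.0 - original_data.min()
shifted = original_data + shift

# With no lambda supplied, SciPy estimates it by maximum likelihood.
transformed_data, best_lambda = stats.boxcox(shifted)

# Definition: (y**lambda - 1) / lambda, or log(y) when lambda is 0.
expected = special.boxcox(shifted, best_lambda)

print(f"shift applied:    {shift}")
print(f"estimated lambda: {best_lambda:.3f}")
print(f"matches formula:  {np.allclose(transformed_data, expected)}")
```

Keep `shift` and `best_lambda` alongside the transformed values; both are needed to map results back to the original scale.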

Post-Transformation Evaluation

After transforming your data, reassess normality using the same visual and statistical methods applied in the pre-transformation analysis. This will help you determine the effectiveness of the transformation. Additionally, compare the results of your statistical analyses (e.g., regression, ANOVA) before and after the transformation to understand the impact on your conclusions.

Visual Reassessment: Generate the same plots as in the pre-transformation analysis to visually inspect the transformed data’s distribution.

Statistical Tests Reapplication: Reapply Shapiro-Wilk or Kolmogorov-Smirnov tests to the transformed data to quantitatively assess normality.
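A before-and-after comparison can be packaged into a small helper. The sketch below is one possible arrangement, not a standard API: `normality_summary` is a hypothetical function name, the data are simulated, and the Q-Q correlation from `scipy.stats.probplot` is used as a numeric stand-in for eyeballing a Q-Q plot.

```python
import numpy as np
from scipy import stats


def normality_summary(values):
    """Return skewness, Shapiro-Wilk W and p, and the Q-Q plot correlation."""
    w_stat, p_value = stats.shapiro(values)
    # probplot returns the Q-Q points plus a least-squares fit; r near 1
    # means the points lie close to a straight line.
    _, (_, _, r) = stats.probplot(values, dist="norm")
    return {
        "skew": float(stats.skew(values)),
        "W": float(w_stat),
        "p": float(p_value),
        "qq_r": float(r),
    }


rng = np.random.default_rng(7)
before = rng.lognormal(mean=0.0, sigma=1.0, size=200)  # hypothetical raw data
after = np.log(before)                                  # transformed data

for label, sample in [("before", before), ("after", after)]:
    s = normality_summary(sample)
    print(f"{label:>6}: skew = {s['skew']:.3f}, W = {s['W']:.3f}, "
          f"p = {s['p']:.3g}, Q-Q r = {s['qq_r']:.3f}")
```

A higher W, a Q-Q correlation closer to 1, and skewness closer to 0 after the transformation all point in the same direction, which is more convincing than any single number.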

Case Studies and Applications

Real-World Examples

Applying data transformations for normality is not merely theoretical; it has proven pivotal in numerous real-world scenarios. For instance, in a landmark study on the effects of environmental factors on plant growth, researchers faced data that was heavily skewed due to a few outlier plants exhibiting exceptional growth. By applying a log transformation, they normalized the data, revealing significant insights into the average effects of various treatments that had been obscured by outliers.

In another case, a financial analyst used the Box-Cox transformation to stabilize the variance of stock returns over time, allowing for more accurate predictions and risk assessments. This transformation corrected the heteroscedasticity in the financial time series data, improving model fit and forecasting reliability.

Sector-Specific Applications

Healthcare: In clinical trials, data transformations are often employed to normalize response variables, enabling parametric statistical tests to assess treatment efficacy. For example, the log transformation has been used to normalize data on patient response times to a new medication, facilitating the identification of statistically significant improvements over the control group.

Finance: Financial data, such as stock prices and returns, often exhibit skewness and heavy tails. Transformations, particularly the Box-Cox and log transformations, are regularly used to model such data more effectively, aiding in developing more reliable economic models and investment strategies.

Engineering: Engineers use data transformations to normalize data from experiments and simulations, ensuring accurate analyses. For example, in quality control, the square root transformation is applied to count data, like the number of defects per batch, to stabilize the variance and improve the sensitivity of control charts.
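The variance-stabilizing effect of the square root on count data can be checked with a short simulation. This sketch assumes the defect counts follow a Poisson distribution, which is a modeling assumption rather than something established above; the rates of 10, 50, and 200 defects per batch are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical defect counts per batch at three different average rates.
results = {}
for mean_defects in (10, 50, 200):
    counts = rng.poisson(lam=mean_defects, size=20_000)
    raw_var = counts.var(ddof=1)             # grows with the mean
    sqrt_var = np.sqrt(counts).var(ddof=1)   # stays roughly constant
    results[mean_defects] = (raw_var, sqrt_var)
    print(f"mean {mean_defects:>3}: variance raw = {raw_var:7.1f}, "
          f"sqrt = {sqrt_var:.3f}")
```

Under the Poisson assumption the raw variance tracks the mean, while the variance of the square-rooted counts stays near 0.25 across all three rates, which is what makes a single set of control limits workable.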

Conclusion

In navigating the intricate paths of statistical analysis, the discourse on data transformations for normality has illuminated a path toward unraveling the intrinsic beauty and underlying truths within data. This exploration, grounded in precision and authenticity, equips us with profound insights and methodologies to elevate our understanding and application of statistical practices. From the foundational concepts to the practical applications across various sectors, we’ve journeyed through the essence of normality, delved into transformative techniques, and witnessed their profound impact in real-world scenarios.

Frequently Asked Questions (FAQs)

Q1: Why is normality essential in data analysis? Normality is crucial for the validity of many statistical tests that assume data distribution is normal, ensuring accurate results.

Q2: What is a log transformation? It’s a technique to reduce skewness in positively skewed data by applying the natural logarithm to each data point.

Q3: How does the Box-Cox transformation work? The Box-Cox transformation finds the value of the parameter λ that best normalizes the data. It applies to positive, continuous variables.

Q4: When should I apply data transformation? Apply transformations when your data significantly deviates from normality, affecting the validity of statistical tests.

Q5: Can I reverse data transformations? Yes, transformations like log and Box-Cox are reversible, allowing the return to the original data scale for interpretation.

Q6: Are there data that shouldn’t be transformed? Data with zero variance, or data containing zero or negative values, may not be suitable for certain transformations, like log or Box-Cox.

Q7: What role do normality tests play in data transformation? Normality tests, like Shapiro-Wilk, help determine if your data requires transformation to meet normality assumptions.

Q8: How does normality affect machine learning models? Normality in features can improve model performance, especially in algorithms assuming normally distributed data.

Q9: Can data transformation improve outlier resistance? Often, yes. Transformations such as the log compress large values, which reduces the influence of extreme observations in the tail and can lead to more robust analyses.

Q10: What is the importance of post-transformation evaluation? Evaluating data post-transformation ensures the transformation achieved normality, validating subsequent statistical tests.